A Single Docker Container Generated 20 GB of Logs. Here's How I Reclaimed the Disk Space

Fri, 29 Aug 2025 12:40:58 +0530

I use Ghost CMS for my websites. Recently, they changed their self-hosting deployment recommendation to Docker instead of native installation.

I took the plunge and switched to the Docker version of Ghost. Things were smooth until I got notified that the disk was running out of space.

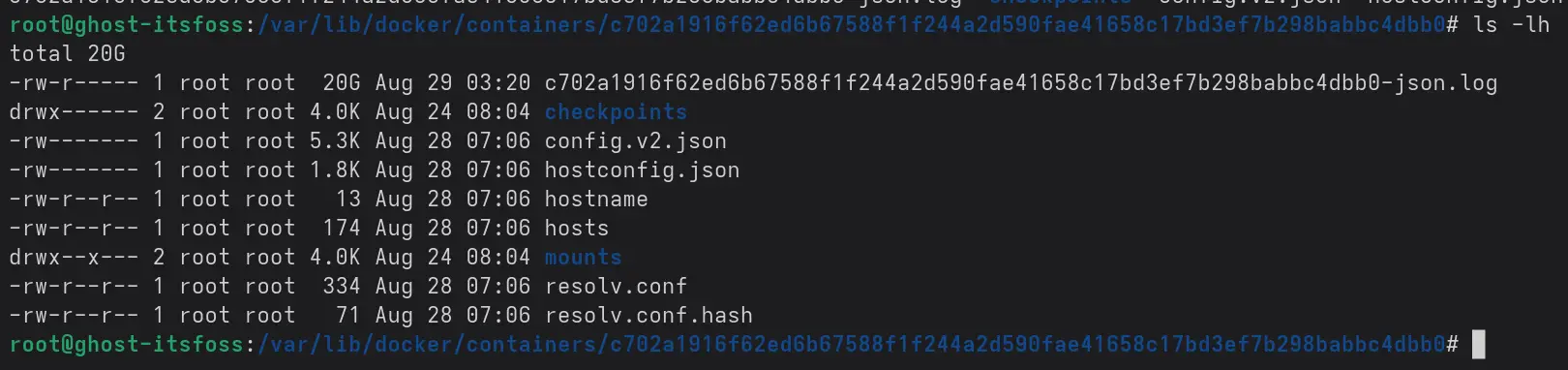

When I investigated which folders were taking the most space, I was surprised to see a single Docker container directory taking around 21 GB of disk storage. It was the container itself, not a Docker volume, image, or overlay.

Looking closer, I saw a single JSON log file that took up 20 GB of disk space.

Unusual, right? I was expecting logs to play a role, but I didn't expect to find them here. I clearly needed to revisit how Docker logging works.

Let me share how I fixed the issue.
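A rough way to reproduce that search from the shell is to list the container log files by size. This is only a sketch: it assumes Docker's default data directory (/var/lib/docker), the default json-file logging driver, and GNU find. The function name `largest_container_logs` is made up for illustration.

```shell
# List the ten largest container log files, biggest first (size in bytes).
# Assumptions: default data root /var/lib/docker, json-file logging driver,
# GNU find (for -printf). largest_container_logs is an illustrative name,
# not a Docker command.
largest_container_logs() {
  # The pattern also matches rotated files such as <id>-json.log.1
  find "${1:-/var/lib/docker/containers}" -type f -name '*-json.log*' \
    -printf '%s\t%p\n' | sort -rn | head -n 10
}

# Usage, from a root shell (the directory is not readable by normal users):
#   largest_container_logs
```

The optional argument lets you point it at a different directory if your Docker data root has been moved.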

Using log rotation in Docker Compose

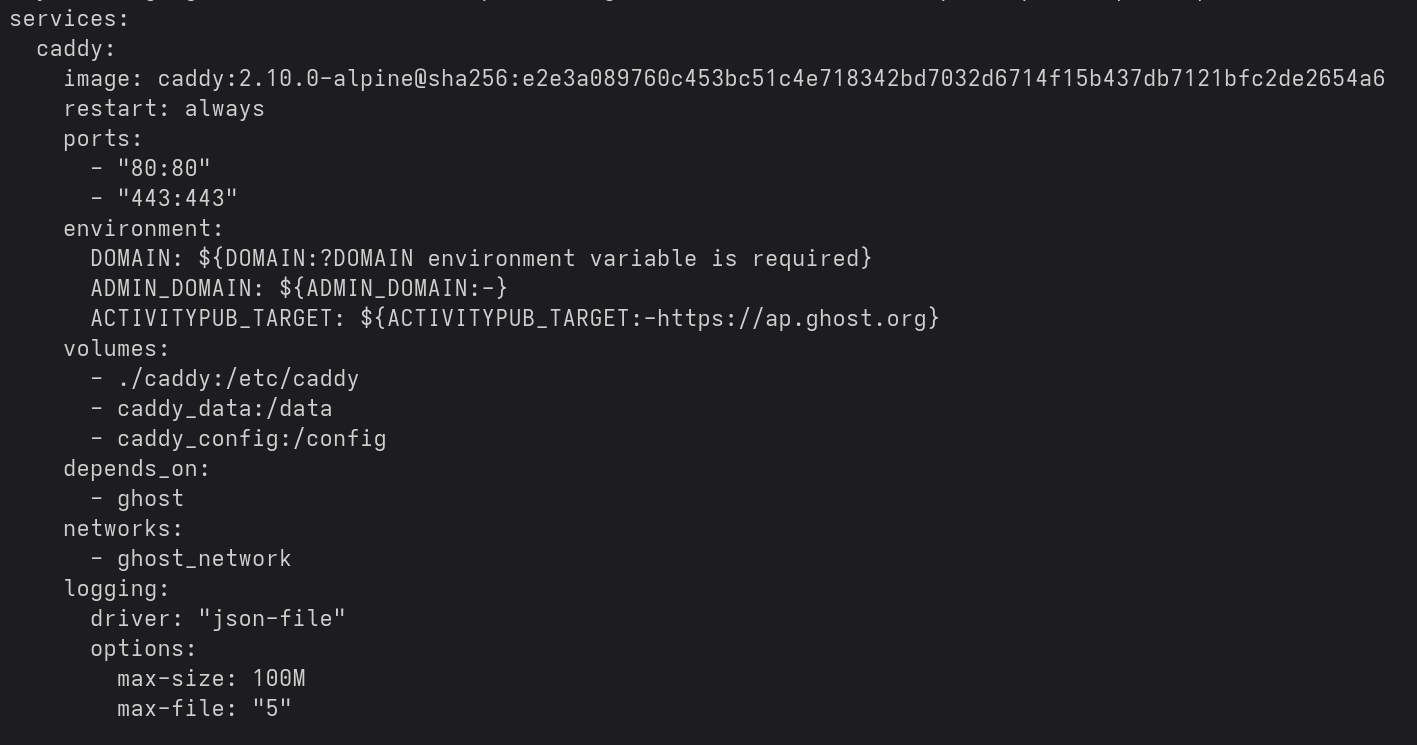

The solution was to define log rotation for the service in the Docker Compose file, like this:

    logging:
      driver: "json-file"
      options:
        max-size: 100M
        max-file: "5"

This tells Docker to keep using JSON log files for the container, but to cap each file at about 100 MB. When a file reaches that size, Docker starts a new one. It keeps at most five log files per container and deletes the oldest, so the logs of one container stay around 500 MB at most. You can change these values as desired.

But there is more to it than that. You need to identify which service/container is generating the huge log files. Alternatively, you could add the logging section to every service and make the whole stack "log-eating-disk-space-proof".

My setup uses a Docker Compose file. I believe most Docker deployments use Compose, since an application usually needs more than one service: a server, a database, and other components.
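As a quick sanity check on those numbers, the worst-case log footprint is roughly max-size multiplied by max-file, per container. A minimal sketch of that arithmetic follows; `log_budget_mb` is a hypothetical helper, and the four-container figure is just an example.

```shell
# Approximate worst-case json-file log footprint, in MB, for a rotation policy.
# Arguments: max-size in MB, max-file count, number of containers (default 1).
# log_budget_mb is a hypothetical helper, not a Docker command.
log_budget_mb() {
  echo $(( $1 * $2 * ${3:-1} ))
}

log_budget_mb 100 5      # one container with the settings above: prints 500
log_budget_mb 100 5 4    # the same policy on four containers: prints 2000
```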

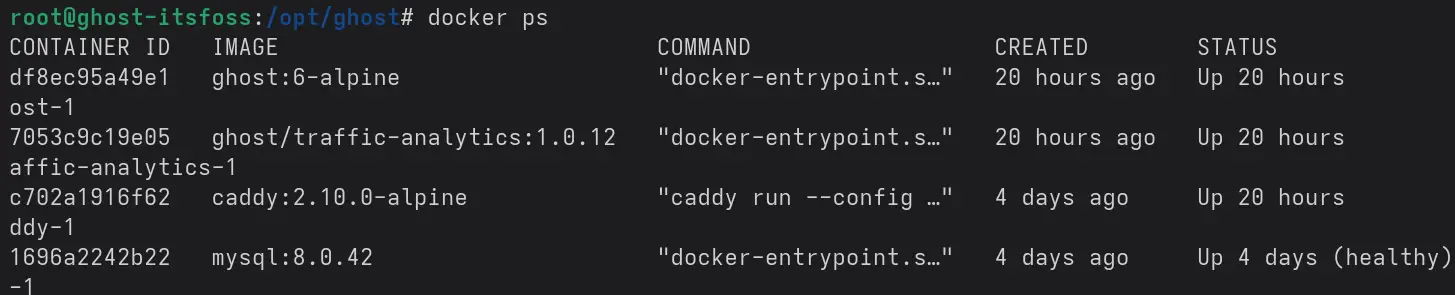

My first challenge was to find which Docker container was causing the issue. If multiple containers are running, you can identify the culprit by running:

    docker ps

It shows the container IDs. The folder names in /var/lib/docker/containers are the full container IDs, so the first 12 characters of the folder name should match the ID shown by docker ps. This way, you can identify which container is producing such huge log output.

As you can see in the two screenshots I shared, the problem was with c702a1916f62ed6b67588f1f244a2d590fae41658c17bd3ef7b298babbc4dbb0 which is from the caddy container with ID c702a1916f62.
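If you would rather not compare IDs by eye, the matching can be scripted. The sketch below reads "full ID, name" pairs and prints the name whose ID starts with the folder name (or any prefix of it). The helper name `container_name_for_dir` is an assumption for illustration, not part of Docker.

```shell
# Given "FULL_ID NAME" lines on stdin, print the name of the container whose
# full ID starts with the folder name (or ID prefix) passed as the first argument.
# container_name_for_dir is an illustrative helper, not part of Docker.
container_name_for_dir() {
  awk -v id="$1" 'index($1, id) == 1 { print $2 }'
}

# Usage: feed it untruncated IDs and names from docker ps.
#   docker ps -a --no-trunc --format '{{.ID}} {{.Names}}' \
#     | container_name_for_dir c702a1916f62
```

Since the folder name is the full container ID, passing it to `docker inspect --format '{{.Name}}'` should also print the container name directly.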

So, I modified the compose.yml file with the section I shared earlier. Here's what the compose file looked like afterward:

I restarted the services with:

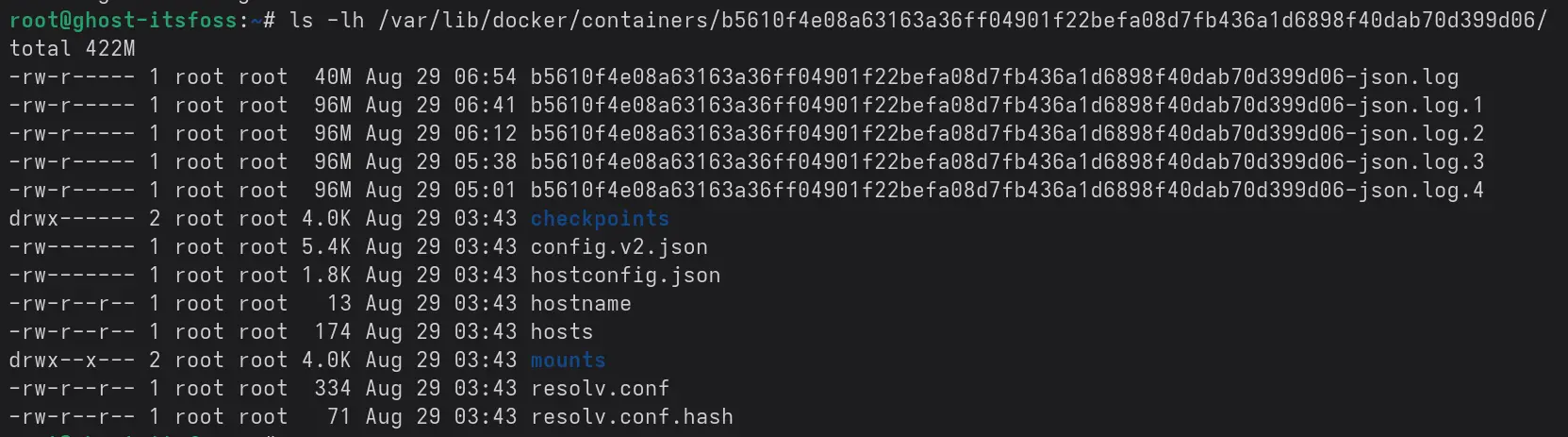

    docker compose up -d

This had two effects. Since new containers were created, the old container c702a1916f62 was removed and its disk space was freed automatically. And the new container creates smaller log files.

As you can see in the screenshot above, there are five log files, each under 100 MB in size.
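To double-check which running containers actually picked up a size limit, you can look at the logging configuration that docker inspect reports. The following is a sketch under the assumption that the limit shows up as a "max-size" key in the container's HostConfig.LogConfig; the function name is made up.

```shell
# Print the IDs of running containers that have no max-size log option set.
# Assumption: the option appears as "max-size" in HostConfig.LogConfig.
# containers_without_log_limit is an illustrative name, not a Docker command.
containers_without_log_limit() {
  for id in $(docker ps -q); do
    if ! docker inspect --format '{{json .HostConfig.LogConfig}}' "$id" \
        | grep -q '"max-size"'; then
      echo "$id"
    fi
  done
}

# Usage:
#   containers_without_log_limit
```

An empty result suggests every running container has a rotation limit in place.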

What if you do not use compose?

If you are not using Docker Compose and have a single container, you can run the container with these added parameters:

    --log-opt max-size=100m --log-opt max-file=5

Here is an example command for reference:

    sudo docker run -ti --name my-container --log-opt max-size=100m --log-opt max-file=5 debian /bin/bash

Set it up for all the docker containers, system wide

You could also configure the Docker daemon to use the same logging policy by creating/editing the /etc/docker/daemon.json file:

    {
      "log-driver": "json-file",
      "log-opts": {"max-size": "100m", "max-file": "5"}
    }

Once you do that, you should restart the systemd service:

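    sudo systemctl restart docker

Keep in mind that these daemon-level defaults apply only to containers created after the restart. Existing containers keep their old logging configuration until they are recreated.

I am sure there are other or perhaps better (?) ways to handle this situation. For now, this setup works for me, so I am not going to experiment any further, especially not in a production environment. I hope it teaches you a few new things and helps you regain precious disk space on your Linux server.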
